When we aren’t being treated, we’re being tested. We rely on testing almost as much as we rely on our drugs.

We wish these tests were all-seeing and all-knowing, but often they’re more like getting directions from a geezer on his front porch. Better accuracy is a never-ending research goal. Is a test using AI better than current diagnostics? How reliable is a vendor’s new PSMA PET scan? How reliable is my own PET scan, which I’ve just received?

A test may not always tell the truth, but we can measure how likely it is to be lying.

Asking the right questions

What makes a test good?[1]

Well, duh, we want it to tell us we have cancer when we have cancer. We don’t want it to miss any.

This isn’t enough, though. We also want it not to tell us we have cancer when we don’t have cancer. We don’t want it finding any that’s not there.

You might imagine that a test that does one of these automatically does the other, but it’s easy to show otherwise. Consider a test that always reports “Yes, there’s cancer.” It misses nothing. It returns positive even for the barest trace of disease. The test stinks, of course, because it’s also finding cancer when there is none.

Just as easily, we can construct a test that will never return a false alarm. It always reports “No cancer!” We don’t think much of this test either.

For a test to be perfect, positives and negatives both need to be correct. As we’ve seen, it’s possible for a test to excel in one while failing at the other. We need to measure a test both ways before deciding it’s a good test.

Sensitive and specific

The necessary qualities are summed up in the terms sensitive and specific. A sensitive test will always find the problem; a specific test makes no false accusations. These are like, on the witness stand, telling the whole truth (sensitive) and nothing but the truth (specific). You want both.

Sensitivity and specificity are reported as percentages. Here are precise definitions:

Sensitivity is the percentage of patients with disease who test positive. If sensitivity is perfect, this is 100%.

Specificity is the percentage of healthy patients who test negative; in other words, we don’t want any healthy patients testing positive. If specificity is perfect, this too is 100%.[2]
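Here is a minimal Python sketch of those two definitions, applied to the two useless tests described earlier. The patient counts (20 with cancer, 80 without) are made up for illustration, and the function names are my own.

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    """Percentage of patients with disease who test positive."""
    return 100.0 * true_pos / (true_pos + false_neg)


def specificity(true_neg: int, false_pos: int) -> float:
    """Percentage of healthy patients who test negative."""
    return 100.0 * true_neg / (true_neg + false_pos)


# Hypothetical validation set: 20 patients with cancer, 80 without.
# A test that always answers "Yes, there's cancer" flags all 100 of them.
always_yes_sens = sensitivity(true_pos=20, false_neg=0)
always_yes_spec = specificity(true_neg=0, false_pos=80)

# A test that always answers "No cancer!" flags nobody.
always_no_sens = sensitivity(true_pos=0, false_neg=20)
always_no_spec = specificity(true_neg=80, false_pos=0)

print(f"always yes: sensitivity {always_yes_sens:.0f}%, specificity {always_yes_spec:.0f}%")
print(f"always no:  sensitivity {always_no_sens:.0f}%, specificity {always_no_spec:.0f}%")
```

Each lazy test scores 100% on one measure and 0% on the other, which is why neither number means much alone.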

Predictive value

Don’t walk away yet, though. There’s a question these definitions don’t answer: If my test returns a negative/positive result, what’s the probability I actually am negative/positive? Perhaps surprisingly, the answers are not the same as the test’s sensitivity and specificity.

Consider two questions:

1. If I have cancer, what’s the probability that my test is positive?

2. If my test is positive, what’s the probability that I have cancer?

To see how different those questions are, consider these instead:

1. If I’m in Seattle, what’s the probability that it’s raining?

2. If it’s raining, what’s the probability that I’m in Seattle?

With respect to lab results, it’s question 2, not question 1, that you need answered. This question has its own name: positive predictive value. (Question 1 is, in fact, sensitivity.)

Even more likely, you’re wondering whether cancer was missed (“If my test is negative, how much can I trust it?”). This is negative predictive value.

What’s the difference?

What is it that makes predictive values different from sensitivity and specificity?

In the Seattle context, the difference between the questions is obvious. The answer to question 2 depends only partly on how rainy Seattle is; it also depends on how big Seattle is relative to everywhere else, and on how much it rains everywhere else.

Now put this into a medical setting. You want to know the probability of your test result being correct, but the probability depends in part on how common a condition is. If the condition being tested for is rare, negative predictive value gets a free ride — most negative test results will be correct (NPV high) even if a test returns many false negatives (poor sensitivity). If the condition is common, positive predictive value gets a free ride — most positive results will be correct (PPV high), even if the test itself gives many false positives (poor specificity).

It may seem frustrating, but the external factor of prevalence (how common a disease is) inevitably controls the probability of your results being correct.
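To see the effect of prevalence in numbers, here is a small Python sketch that derives both predictive values from sensitivity, specificity, and prevalence (this is just Bayes’ rule written out). The 90%/90% test and the prevalence figures are invented for illustration, and `predictive_values` is my own name, not a standard function.

```python
def predictive_values(sens: float, spec: float, prevalence: float) -> tuple[float, float]:
    """Return (PPV, NPV) as fractions; all three inputs are fractions between 0 and 1."""
    true_pos = sens * prevalence                # sick and flagged
    false_neg = (1 - sens) * prevalence         # sick but missed
    true_neg = spec * (1 - prevalence)          # healthy and cleared
    false_pos = (1 - spec) * (1 - prevalence)   # healthy but flagged
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# Same hypothetical test (90% sensitive, 90% specific) at different prevalences.
for prevalence in (0.01, 0.10, 0.50, 0.90):
    ppv, npv = predictive_values(sens=0.90, spec=0.90, prevalence=prevalence)
    print(f"prevalence {prevalence:4.0%}: PPV {ppv:5.1%}, NPV {npv:5.1%}")
```

With these made-up inputs, the identical test has a PPV of about 8% when only 1% of patients have the condition, and an NPV of only 50% when 90% of them do. The test didn’t change; the population did.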

AUC

To describe the quality of a new test, a paper will sometimes report a single number, the AUC. A test with an AUC of 1.0 has perfect sensitivity and specificity; an AUC of 0.5 is no better than flipping a coin. The closer the AUC is to 1.0, the better the test.[3]
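One way to get a feel for the number: if the test produces a numeric score, the AUC equals the probability that a randomly chosen patient with disease scores higher than a randomly chosen healthy patient. The Python sketch below computes it that way, by brute-force comparison of every pair. The scores are invented, and real papers usually compute AUC with a statistics package rather than a loop like this.

```python
def auc(scores_with_disease: list[float], scores_healthy: list[float]) -> float:
    """Fraction of (diseased, healthy) pairs where the diseased score is higher.

    A tied pair counts as half a win.
    """
    wins = 0.0
    for sick in scores_with_disease:
        for healthy in scores_healthy:
            if sick > healthy:
                wins += 1.0
            elif sick == healthy:
                wins += 0.5
    return wins / (len(scores_with_disease) * len(scores_healthy))


# Hypothetical scores: every sick patient outscores every healthy one.
perfect = auc([0.8, 0.9, 0.7], [0.1, 0.3, 0.2])

# Hypothetical scores with some overlap between the two groups.
overlapping = auc([0.8, 0.4, 0.6], [0.5, 0.3, 0.2])

print(f"perfect separation: AUC = {perfect:.2f}")
print(f"some overlap:       AUC = {overlapping:.2f}")
```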

ExoDx

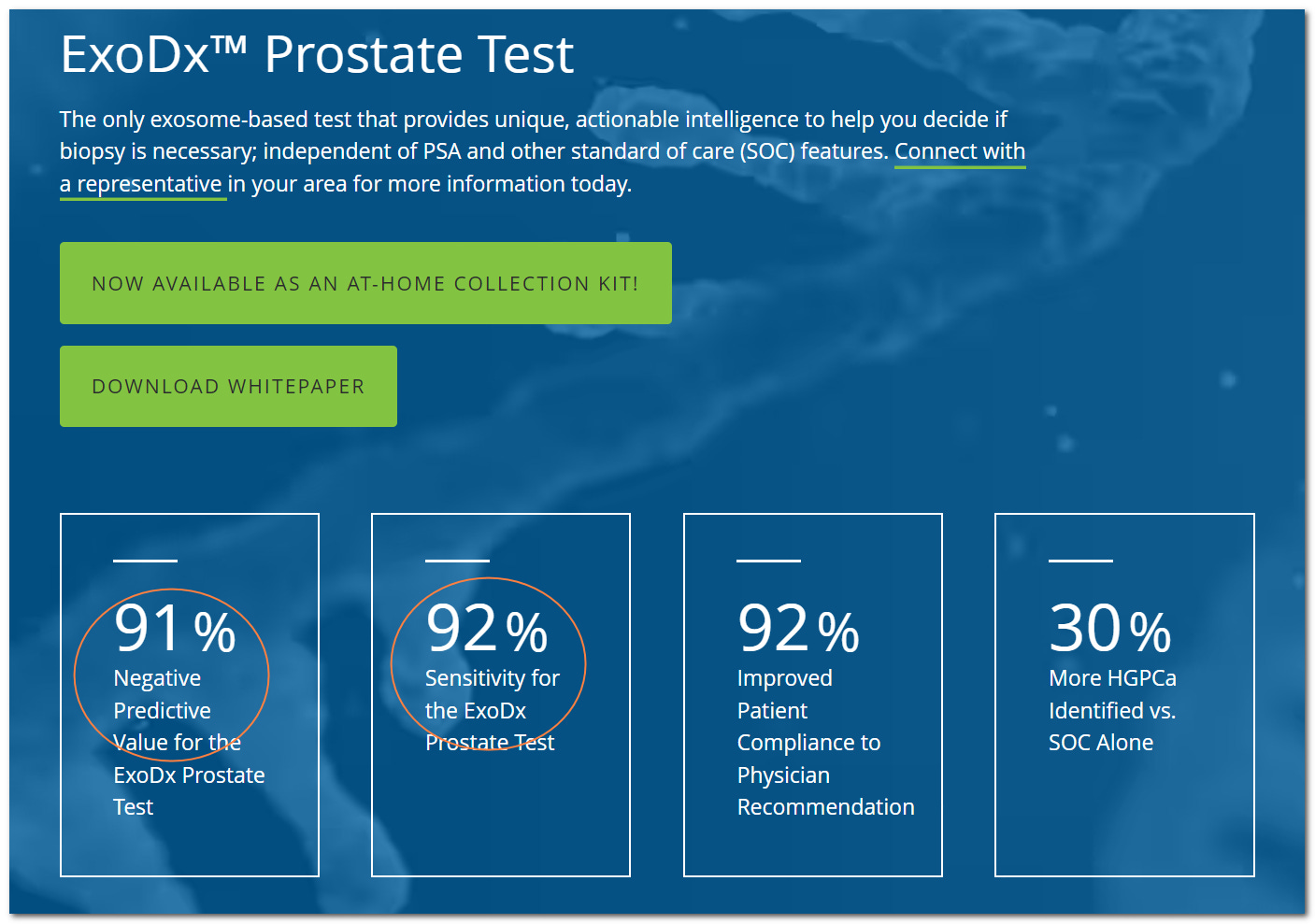

The test illustrated above is for men on active surveillance whose PSA is only slightly elevated and who wonder if they should get a biopsy. It predicts from a urine sample whether the cancer is low-grade and unlikely to be a risk, or high-grade (Gleason >= 7).

We see the test has a negative predictive value of 91%. A negative test predicts low-grade cancer; if the test returns negative, the ad is saying there’s a 91% probability of the cancer being low-grade, and a 9% chance that the cancer will turn out to be high-grade.[4]

Since men get the test to avoid unnecessary treatment, the ad ought to also disclose positive predictive value — if the test predicts high-grade cancer, how likely is that to pan out? The ad avoids that question and lists sensitivity instead.

We can guess why the positive predictive value might be underwhelming. Imagine that the large majority of men with these modest PSAs have low-grade, not high-grade, cancer (which seems plausible). Then, even if the test has a low false-positive rate, the large number of men without high-grade cancer produces a lot of false positives, and that means a positive result is less likely to be correct. Earlier we saw how population statistics can improve predictive value; here we see how they can reduce it.
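To make that guess concrete, here is a head count in Python. Every number in it is hypothetical: I don’t know the real share of high-grade cancer among men who take this test, or the test’s real false-positive rate. The point is only to show how a respectable-looking test can still yield a weak positive predictive value.

```python
# All figures below are hypothetical, chosen only to illustrate the argument.
men_tested = 1000
high_grade = 100                       # assume 10% truly have high-grade cancer
low_grade = men_tested - high_grade    # the other 900 do not

sensitivity = 0.90                     # share of high-grade cancers the test flags
false_positive_rate = 0.10             # share of low-grade cases wrongly flagged

true_positives = round(high_grade * sensitivity)
false_positives = round(low_grade * false_positive_rate)
false_negatives = high_grade - true_positives
true_negatives = low_grade - false_positives

ppv = true_positives / (true_positives + false_positives)
npv = true_negatives / (true_negatives + false_negatives)

print(f"flagged correctly: {true_positives}, flagged wrongly: {false_positives}")
print(f"PPV = {ppv:.0%}, NPV = {npv:.1%}")
```

In this invented cohort, the 900 men without high-grade cancer generate as many positives as the 100 men with it, so a positive result is a coin flip even though the test is wrong about only one man in ten.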

Summing up

To summarize, the credibility of a test depends on two pairs of measurements.

If we’re talking about test quality in isolation, the measurements are sensitivity and specificity. An ideal test achieves 100% in both; a realistic test will need to trade off one against the other. Test developers can measure sensitivity and specificity because they already know which samples have disease.

If we’re talking about you reviewing your test result, the credibility measurements are positive and negative predictive value. Positive predictive value is the probability that, if your test returns positive, the result is correct; 100% - PPV is the probability that the test was wrong and you’re negative. Negative predictive value works the same way for a negative result. Though it may not be obvious, the accuracy of a test’s positive results and the accuracy of its negative results are separate questions, and you want to look at both.

[1] To be clear, I mean tests that return positive/negative results, not, for instance, whether the numbers in your blood work are accurate. When I talk about the accuracy of a PSMA PET scan, I’m thinking of just the scan; I assume it’s read by an ideal physician.

[2] Knowing who’s actually sick and healthy requires some other, established standard of truth. So a biopsy might be the standard of truth in evaluating a PSMA PET scan.

[3] AUC stands for “area under the curve.” The details of this curve are interesting but (the usual disclaimer) outside the scope of this blog.

[4] The test is meant to be used in the context of other evidence.
